Visual Object Tracking

Contact: Simone Frintrop

Visual object tracking is the task of tracking a predefined target over several frames of an image sequence. We are especially interested in approaches that are applicable to new objects without a long training phase (ad hoc online learning) and that are able to work in real time on a mobile platform. Such a platform might be a robot, a hand-held camera, or a camera mounted on a car.

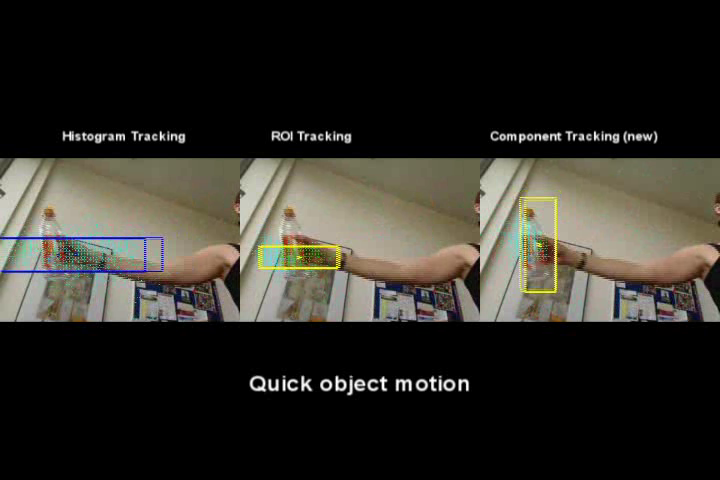

Here, we present a particle-filter-based tracking approach with a cognitive observation model [Frintrop, ICRA 2010 (pdf)]. The core of the approach is a component-based descriptor that flexibly captures the structure and appearance of a target. The descriptor can be learned quickly from a single training image. Here is a video with some of our tracking results:

[avi, 17 MB]

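To make the particle filter idea concrete, here is a minimal sketch of a generic bootstrap particle filter for 2D position tracking. It is not the implementation from the ICRA 2010 paper: the component-based observation model is replaced by a placeholder Gaussian likelihood around a measured position, and all names and parameters (`predict`, `weight`, `estimate`, `resample`, `gaussian_likelihood`, `track`, `motion_std`, `obs_sigma`) are assumptions made for illustration only.

```python
import math
import random


def predict(particles, motion_std, rng):
    """Diffuse each (x, y) particle with Gaussian noise (random-walk motion model)."""
    return [(x + rng.gauss(0.0, motion_std), y + rng.gauss(0.0, motion_std))
            for x, y in particles]


def weight(particles, likelihood):
    """Score each particle with the observation model and normalize to sum to 1."""
    raw = [likelihood(p) for p in particles]
    total = sum(raw)
    if total <= 0.0:
        # All likelihoods vanished: fall back to uniform weights.
        return [1.0 / len(particles)] * len(particles)
    return [w / total for w in raw]


def estimate(particles, weights):
    """Weighted mean of the particle positions (the estimated target position)."""
    x = sum(w * p[0] for p, w in zip(particles, weights))
    y = sum(w * p[1] for p, w in zip(particles, weights))
    return (x, y)


def resample(particles, weights, rng):
    """Systematic resampling: draw len(particles) samples proportional to weight."""
    n = len(particles)
    step = 1.0 / n
    u = rng.random() * step
    out, cumulative, i = [], weights[0], 0
    for _ in range(n):
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        out.append(particles[i])
        u += step
    return out


def gaussian_likelihood(measurement, sigma):
    """Placeholder observation model (assumption): Gaussian around a measured position."""
    def likelihood(p):
        d2 = (p[0] - measurement[0]) ** 2 + (p[1] - measurement[1]) ** 2
        return math.exp(-d2 / (2.0 * sigma ** 2))
    return likelihood


def track(measurements, n_particles=500, motion_std=5.0, obs_sigma=10.0, seed=0):
    """Run predict / weight / estimate / resample once per frame."""
    rng = random.Random(seed)
    x0, y0 = measurements[0]
    particles = [(x0 + rng.gauss(0.0, obs_sigma), y0 + rng.gauss(0.0, obs_sigma))
                 for _ in range(n_particles)]
    estimates = []
    for z in measurements:
        particles = predict(particles, motion_std, rng)
        weights = weight(particles, gaussian_likelihood(z, obs_sigma))
        estimates.append(estimate(particles, weights))
        particles = resample(particles, weights, rng)
    return estimates
```

In a real tracker, the likelihood would compare image content at each particle with the learned target descriptor instead of using a measured position; only that function would need to change, while the predict, weight, and resample steps stay the same.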

We have also applied this technique to person tracking from a mobile robot [Frintrop et al., Int'l J. of Social Robotics 2010 (pdf)]. Here you can see the robot Blücher that we used for our experiments, the environment at the FGAN research institute in which we performed the experiments, and an example frame of the tracking results. The green and cyan dots on the image show the particles; the rectangle denotes the estimated target position.

This work extends our earlier paper [Frintrop et al., ICRA Workshop on People Detection and Tracking 2009 (pdf)], in which we used a simpler observation model without components.

In previous work, we presented a visual tracking system based on the biologically motivated attention system VOCUS [Frintrop, Kessel, ICRA 2009 (pdf)]. VOCUS has a top-down part that enables it to actively search for target objects in a frame. We have tracked balls, cups, cats, faces, and persons, all with the same algorithm and the same parameter set. Here is a video with some of the tracking results (quantitative results are in the ICRA paper):

[avi, 16 MB]


The above papers extend parts of the diploma thesis of Markus Kessel. Some additional results from his thesis are shown in the following videos. 'CamShift' is the CamShift algorithm of Bradski (1998), which was used as a comparison method here (for a quantitative comparison, see the ICRA 2009 paper). The MSR (most salient region) tracker and the particle tracker were developed in this thesis:

- CamShift: [Person left] [Person right]

- MSR Tracker: [Person left] [Person right]

- Particle Tracker: [Person left] [Person right]

Next, we show the feature vectors that were computed by the attention system VOCUS and used for the most salient region (MSR) tracking. The first 10 rows show the 'feature type' or 'feature map' values; the last three rows show the 'feature channel' or 'conspicuity map' values. The columns correspond to the targets of the ICRA 2009 paper.